I Made The World’s Best In-Memory Database But Forgot to Tell Anybody

What Now?

I’ve spent the past half decade creating the world’s best in-memory database, but I forgot to tell many people about it. Whoops.

Now here’s all the details of the best database ever.

TOC

- Top Features

- Architecture

- Data Processing Internals

- Data Structure Internals

- More Features

- What It All Means

Top Features

scalable architecture from 1 to 1,024+ cores per server

legacy memcached protocol and command support

legacy redis protocol and command support

most efficient in-memory database with smallest memory footprint ever

- database uses dozens of custom maximally efficient data structures

- every data structure is optimized to have no wasted bytes (and often not even wasted bits)

- we have 90% less memory overhead than legacy memcached1 and 85% to 95% less memory overhead than legacy redis

full TLS support

- TLS decryption of requests runs on different threads than TLS encryption of replies. reads and replies happen concurrently.

nested namespace support

- namespaces can be used as security boundaries

- nested namespaces can hold any value type

- all nested elements can have independent expirations

- nested namespaces act as key prefix compression too (since storing many sub-keys under parent keys won’t duplicate the parent key for each nested child key)

legacy redis reply syntax can be upgraded to deliver native json or python formatted replies

full virtual network hosting support

- each network can restrict clients into a private namespace not visible to other networks

- each virtual network can have its own TLS configuration

- each virtual network can set a default reply syntax (regular, json, python)

- each virtual network records independent usage details in the stats output

- each virtual network can specify access restrictions for clients (admin, stats, read, write)

server stats output is json formatted with ability to report partial sections for faster metrics

logging happens out-of-process to not block data access

Carrier Cache and Carrier DB

Carrier Cache replaces legacy memcached servers.

Carrier DB replaces legacy redis servers and also supports legacy memcached protocol2.

Both servers are built using the same architecture and code internals. Carrier Cache is a more streamlined in-memory cache implementation since it doesn’t need any of the extra multi-data-structure processing code, so many features are simply commented out. Carrier DB is the full implementation with all features available at configure time and runtime.

We use Carrier DB throughout this page to mean both Carrier DB and Carrier Cache unless otherwise specified.

Architecture

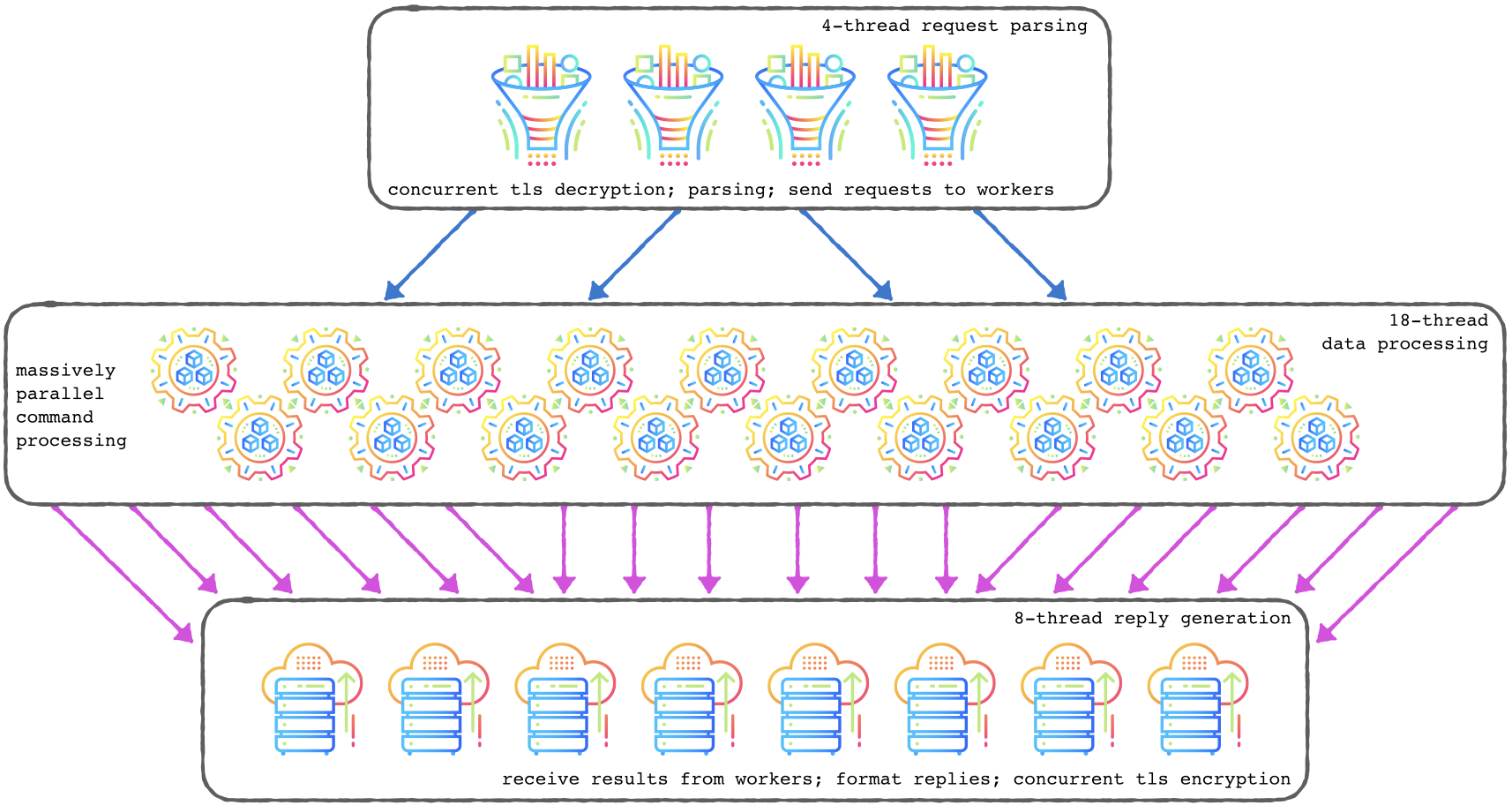

Let’s look at Carrier DB’s novel massively parallel architecture first. You can configure any number of threads at each level of processing to maximize your performance and throughput so none of your hardware investment goes to waste.

Below is a basic Carrier DB configuration with:

- 4 inbound parser threads (also handles concurrent tls decryption)

- 18 worker threads

- 8 replier threads (also handles concurrent tls encryption)

Dataflow Diagram

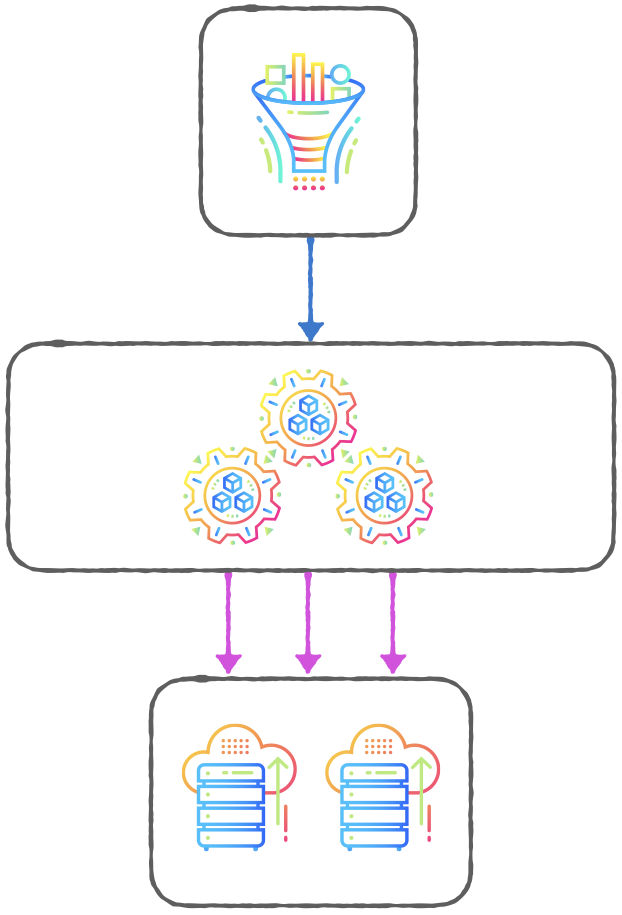

We could just as easily configure a smaller system with one reader thread, three data processing threads, and two reply threads:

Carrier DB can scale up to hundreds or thousands of cores or scale down to laptop cores—we can do it all with Carrier DB and Carrier Cache.

Carrier DB works great for giant servers or tiny IoT/CPE. Scale up to 256+ cores and 6 TB RAM or scale down to individual IoT deployments. In both cases you need to maximize your return-on-RAM investment by using the most efficient data platform possible.

Data Processing Internals

How does Carrier DB allow massively scalable data structure processing?

We spent a few months studying existing high performance systems like the internals of Erlang (beam), SQLite (vdbe), LuaJIT, FreeBSD, etc until the answer was obvious: we needed to design and create a new VM specifically designed for in-memory, zero-overhead data processing.

Carrier DB’s internal zero-overhead vm (zvm) can be described much like the Erlang VM. As Erik Stenman describes Erlang’s BEAM VM, we can also describe Carrier DB’s zvm:

It is a garbage collecting, reduction counting, virtual, non-preemptive, directly threaded, register machine.

zvm is Carrier DB’s custom VM created by combining design concepts from Erlang and SQLite with some LuaJIT data portability sprinkled throughout.

Command Processing Lifecycle

Let’s walk through a Carrier DB command from start to finish.

- [User] Connect to Carrier DB

- Your connection is assigned to one of the threads in the inbound parser thread pool

- [User] Send a command or commands (one of P parser threads)

- Your parser thread reads and parses your command, potentially decrypting TLS too

- Parser thread sets any metadata needed

- Parser thread configures any namespaces requested

- Parser thread checks for command ACL restrictions

- Parser thread spawns one or more zvm processes for your command

- Parser thread sends your zvm processes to worker threads for execution

- [System] Run a command (one or more of D data worker threads)

- Worker zvm thread wakes up and pulls a zvm process of its inbound work queue

- zvm thread begins executing opcodes in the process until one of:

- process succeeds with a return value

- process fails with an error to return

- process opcode run count (reductions) becomes too high, so the zvm will pause the current process and yield to the next process in the inbound work queue

- when the zvm process reaches an exit condition (success/failure/exception), the result is sent to a reply thread

- [System] Reply to user (one of R reply threads)

- Reply thread loads the zvm process result and needs to check:

- is this reply in the proper order?

- for legacy redis replies, each reply is expected in the order the command was generated. if multiple requests are in-flight and a later zvm process completes faster than earlier zvm process, we must pause returning for this client until the next expected result is available (i.e. replies for client are head-of-line blocked)

- if reply is in proper order, continue with reply

- for legacy memcached replies, each reply includes the key of the request. the base legacy memcached protocol was designed to be highly concurrent and efficient for multi-threaded servers. replies can be sent in any order as rapidly as they are generated.

- is this reply in the proper order?

- Reply thread formats reply as needed for protocol (legacy memcached replies are different than legacy redis replies, and we also allow json and python formatted replies dynamically)

- Reply thread encrypts reply to current client tls session if necessary

- Reply is sent to client

- Reply thread cleans up the zvm result by recycling the zvm process. memory cleanup is simple since all resources used by this result are contained in a single zvm process. when we clean up the zvm process, all dependent resources get cleaned up all at once as well.

- Reply thread loads the zvm process result and needs to check:

- [User] Receive Reply

All those steps look busy and complicated and time consuming, but also remember it’s across three layers of concurrency with each layer having its own defined width of parallelism, and combined with Carrier DB’s underlying most-efficient-ever data structures, replies get returned faster than you’d expect.

A feature you may have missed in the indented wall above: we implement full cooperative multi-tasking via reduction counting. Carrier DB is designed to keep your entire data platform responsive even when large data operations need to happen in production. When a zvm process reaches its opcode reduction count, zvm pauses the zvm process, rotates the zvm process to the end of its work queue, then the zvm loads the next process. When the paused zvm process is dequeued again, it wakes up and continues where it left off3.

Because we’re using a vm-based approach, each command is implemented as a series of opcodes4 which helps reduce the amount of code duplication needed for basic command operations.

Data Structure Internals

Great, so far we have massively multithreaded inbound request handling, data processing, and outbound data processing, but what data is being processed?

It’s time to introduce Carrier DB’s Most Advanced Memory-Efficient Data Structures In The World5.

Carrier DB was designed from data structure first principles. Unlike other in-memory databases, Carrier DB has a fully independent data structure library decoupled and not tied to the implementation of the server at all. There’s no cycle in the dependency graph between the Carrier DB server runtime and the data structures it uses.

We call our best-in-the-world decoupled data structure library

datakit.

datakit

datakit is currently ~60,000 source lines of code just

containing first-party, non-dependency code.

datakit implements memory-efficient:

- arrays (multiarray: small, medium, full)

- typed flat buffers (linear fully typed forward-reverse list)

- typed lists (multilist: small, medium, full)

- typed key-value maps (multimap: small, medium, full)

- atom allocation system (reference counted, flat storage)

- optimized 84-bit integer set storage with fast AVX2 intersection

- bloom filters (regular and counting)

- accelerated hyperloglog

- linear timer maps

- enhanced custom double-to-string printing

- various data helpers included/improved from LuaJIT and SQLite

- and more!

What makes datakit a kit? In

datakit, every data structure input and output is unified

under the same type system.

Every data structure in datakit can fully round-trip

input from user input to more efficient in-memory storage types (byte

reduced integers, doubles->non-lossy-floats,

floats->non-lossy-bfloat16, etc) and back to user input again via an

efficient type-unified API.

Full round-trip native storage types supported by

datakit include:

- unsigned 64 bit integers

[0, 18446744073709551615]

- signed 64 bit integers

[-9223372036854775808, 9223372036854775807]- all 64-bit integers are reduced to width only needed for their absolute value (1 to 8 bytes)

- unsigned 128 bit integers

[0, 340282366920938463463374607431768211455]

- signed 128 bit integers

[-170141183460469231731687303715884105728, 170141183460469231731687303715884105727]- all 128-bit integers are reduced to either 96-bits (12 bytes) or stored as full size (16 bytes)

- 8 byte double/binary64

- 4 byte float/binary32

- 2 byte half-float/bfloat16

- 2 byte half-float/binary16

- all real types are reduced to minimal width if there’s no value loss when converting smaller

- true/false/null

- empty values

- unicode and binary strings of any length

- and for internal use: pointers stored as full 64-bits or reduced to 48-bits

The unified type system of datakit is what powers

Carrier DB’s ability to accept any data type as any input without

needing multiple commands for different inputs. For example, Carrier DB

INCRBY auto-detects the increment argument type and accepts

the increment value as any of: signed 64-bit integers

[INT64_MIN, INT64_MAX], full unsigned 64-bit

integers [0, UINT64_MAX], and

binary64/doubles.

Next up: let’s look at some interesting components inside

datakit.

multiarray

multiarray sounds simple enough. It’s an array… of

multi.

multiarray is the first efficiency approach we use to

avoid pointer bloat in our modern data structures. We don’t use linked

lists anywhere in any of our data structures. We do

not pay the linked list 8-16 bytes per element overhead. We are better

than pointer hopping. We only use linear multiarray

instances for tracking sequential positions.

A multiarray has three representations inside one

container API:

multiarraySmallmultiarrayMediummultiarrayFull

Even though there are three concrete implementations (small, medium,

full), all access uses a single multiarray() API which

knows how to access the current type as well as how to grow the

underlying multiarray from small to

medium to full when an insert causes an

existing multiarray to exceed its allowed size limits.

What’s inside each multiarray level though?

multiarraySmall is just an array. One array. Example:

you want to store 1,024 pointers taking up 8 KB. That’s a good size for

a multiarraySmall.

But what if you wanted to store 6,000 pointers? Now you’re up to 48

KB and may want more efficient access by splitting one large array into

many smaller arrays each with more ideal add/remove performance. The

multiarray API will automatically upgrade your

multiarraySmall to multiarrayMedium.

multiarrayMedium is an array of arrays, so at this level

you’re safe up to about 1,024 arrays of 1,024 pointers each (1 million

pointers).

But what if you want to store 7 million pointers? Say hello to

multiarrayFull. multiarrayFull is, because we

want to be maximally memory efficient: a forward-reverse xor

linked list of multiarrayMedium instances. This

forward-reverse xor linked list in multiarrayFull is the

only linked list type used in datakit data structures.

With multiarrayFull, you now have unlimited storage,

retrieval, efficient updating, and efficient deleting of fixed-sized

elements in arrays while using the least physical overhead possible and

maximizing performance (plus, no pointer hopping—or at worst, pointer

hopping amortized by one linked list hop per 1 million elements).

All upgrades between multiarray levels

(small -> medium -> full)

get handled automatically by a single wrapper API of

multiarray() which knows the current container type, the

maximum size of the current container, and how to upgrade to the next

container level.

flat buffer

datakit’s flat buffer is another secret weapon in the

war against per-element pointer bloat.

Our flat buffer implementation automatically reduces full-size user input to one of 30 compact in-memory representations. The flat buffer add/update API guarantees all data added uses the least memory possible while still being efficient for quick data access.

Since datakit’s flat buffer is, well, a flat

buffer, it has limited growth possibilities. Inserting to or deleting

from large linear flat memory allocations becomes inefficient above a

few dozen KB.

We avoid the inefficiency of inserting/deleting inside linear memory

blobs by creating multilist and multimap

interfaces which chunk flat buffers into multiple smaller instances.

Accounting for many flat buffers at once requires more clever tracking,

but we retain maximal memory efficiency of using fully typed flat

buffers while also enjoying ease-of-use of individually addressable

elements.

multilist

Much like multiarray, multilist has three

forms:

multilistSmallmultilistMediummultilistFull

The entire multilist hierarchy has one unified API

capable of converting between the levels automatically.

multilist is one (small), two

(medium), or many (full) typed flat buffers

used as a list data structure.

Organizing around a three tier system guarantees small data stays small, medium data can grow a little (as a treat), and full sized data can grow as large as the system allows, all while still using the most efficient storage system ever created6.

The three tiers helps to maximize storage efficiency because small data doesn’t automatically instantiate large accounting overhead. Small data remains small. Nobody wants to create a small list of 2 integers on a server only to have the server secretly allocate 300 bytes of accounting overhead. By having three levels of accounting, we can store small data in small space, medium data in more space, and save the power of full expansive data structures for large data where the overhead can be amortized over hundreds or thousands of values.

multimap

Much like multiarray and multilist,

multimap has three forms:

multimapSmallmultimapMediummultimapFull

The secret of multimap is: how do we convert flat fully

typed binary buffer key-value pairs into viable hash-table-like

usage?

How do we index keys inside one or more flat buffers when their memory addresses change every time any updates happen to them?

We found a way. Welcome to the magic of Carrier DB.

More Features

Multi-Network Hosting

Carrier DB supports listening on an unlimited number of custom networks.

Each network can be individually configured for TLS, ACLs, startup helpers, namespaces, and protocol defaults.

ACLs

Simple per-listening-network access restrictions secure your data exactly as you intend.

Each access level restricts all inbound connections to one or more permissions of:

- admin — connections can do admin tasks (reload certs, shutdown, change log level)

- stats — connections can only run stats (no reads or writes of any data keys allowed)

- read — connections can only read data, no writes

- write — connections can only write data, no reads

TLS

Each virtual network supports using both RSA and ECDSA certs at the same time and TLS can be configured per custom virtual network.

Notify-on-Startup

Each virtual network also supports running one program on startup. The program receives arguments in this order:

networkName— name of the network in your confignetworkHost— host of the network in your confignetworkPort- listening port of the network- if you ask Carrier DB to use a random listening port on startup, the

listening port chosen is provided as

networkPort

- if you ask Carrier DB to use a random listening port on startup, the

listening port chosen is provided as

networkIsTLS- string “tls” if tls, else “notls”networkProtocol— protocol for network (dmp for legacy data memcached proto, drp for legacy data redis proto)

Notify-on-Startup scripts are useful to update a central service with available Carrier DB server details for discovery or to add dynamic monitoring.

Notify-on-Startup scripts can also be used to pre-load static datasets into your server automatically on startup.

Namespace Per Network

Each virtual network can specify a default namespace for all connected clients.

Network namespaces can be either be regular (where clients can escape higher) or locked (where clients can’t escape higher).

Clients can always create deeper namespaces, but locked namespaces can’t be escaped above the level of the most recent lock.

The config namespace parameter takes an exact namespace command as

would be sent to the server by a client.

Port Config

Carrier DB doesn’t have a default port. By default, Carrier DB picks

a random unused port for listening. You can specify your own listening

port on a per-network basis, or you can specify port 0 and

Carrier DB will pick a random unused port.

When using a random port for listening, you can specify a Notify-on-Startup script to tell your infrastructure about the chosen Carrier DB service port.

Network Config Example

Here’s a config example of a server listening on four ports with one

port for TLS, one port for admin access with default json

replies, one port for stats-only access, and one port locked to a

namespace security boundary.

network basicNetwork {

host localhost

port 7780

certchain /etc/ssl/server-rsa.pem

privatekey /etc/ssl/private/private-key-rsa.pem

certchain /etc/ssl/server-ec.pem

privatekey /etc/ssl/private/private-key-ec.pem

enabletls yes

access "stats read write"

protocol drp

}

network adminNetworkWithStatsDefaultJSONReply {

host localhost

port 7781

access "admin stats read write"

protocol drp

syntax json

}

network statsOnlyNetwork {

host localhost

port 7782

access "stats"

protocol drp

}

network securityNamespace {

host localhost

port 7783

access "read write"

protocol drp

namespace "nslock ns 1 2 3 4"

}JSON Stats

Carrier DB (and Carrier Cache, which is built on Carrier DB) return all stats details as nicely formatted JSON. The JSON stats output for Carrier DB and Carrier Cache is documented in the Carrier Cache Tech Specs JSON Stats section.

The stats JSON can be returned all at once, or if you only need to record certain values for monitoring, you can reduce the stats generation overhead by requesting a minimal subset of sections using one or more of:

- license

- process

- cpu

- memory

- startup

- os

- log

- keyspace

- network

Massively Parallel Data Processing

You can configure Carrier DB’s multi-threaded processing in your config file with three easy settings.

Here’s a configuration using 256 threads total for your entire database server:

runtime {

protocolParserInstances 32

dataWorkerInstances 128

networkReplierInstances 96

}The three runtime config settings control:

protocolParserInstances— threads for TLS decryption and command parsingdataWorkerInstances— threads for processing data (each thread creates an independent ZVM event loop)networkReplierInstances— threads for formatting replies and TLS encryption

If you don’t specify a runtime thread topology, Carrier DB will detect your core count and assemble a reasonable configuration at startup.

Certificate Reloading

TLS certs support live reloading using the ADMIN TLS RELOAD

command (if your network has admin permission).

Carrier DB also uses TLS tickets with automatic ticket rotation every couple hours to balance encryption reconnection performance with security.

Build Infrastructure

One secret feature of Carrier DB and Carrier Cache is a scalable multi-platform, multi-target build infrastructure.

All dependencies inside Carrier DB use CMake as the build system because CMake has multi-project-aware dependency graph tracking. We never have to worry about misconfigured poorly hand-written makefiles even across a dozen dependencies. Everything Just Works™.

For external dependencies we didn’t create ourselves, we either convert their build systems to CMake or update their included CMake files to conform to our expected naming conventions and object file generation policies.

Also, by using CMake as a unified 30-dependency build system, we can easily generate one static binary at the end of our build step with coherent optimization levels (or debug levels) propagated inside every dependency with one simple global config option.

Since Carrier DB uses compact binary typed linear flat buffers as a primary data structure, we need to take advantage of both compiler optimizations and CPU hardware optimizations to achieve the most performance possible. We target all recent CPU micro-architectures with independent hardware-optimized builds.

One full release of Carrier DB and Carrier Cache requires building the entire platform about 60 times: one for each modern CPU microarchitecture, for each of Carrier DB and Carrier Cache, then all of those combined across both Linux and macOS.

With our current parallel build infrastructure across all build targets, it takes about 3 hours for a complete release build containing all multi-target, multi-platform binaries.

If we are building our database 60 times, how do users know which binary they should run? Or, what if users have 3 different CPU generations across their server farm? Which server picks which binary? How do server binaries match to server deployments?

To solve the “one product with 24 binaries” problem, we created a

single micro-architecture dispatcher binary to use as the primary

Carrier DB or Carrier Cache command7. You run the dispatcher

carrier-db or carrier-cache binary, it

immediately queries your CPU µarch, then loads the proper

carrier-db-[µarch] binary automatically (all

platform binaries are present in the same directory bundle so the

correct one can be selected at runtime).

What It All Means

Carrier DB and Carrier Cache are the most efficient and most modern in-memory database systems available. In fact, Carrier DB and Carrier Cache are the most efficient in-memory databases possible to ever create.

So, where’s the open source? There is no open source! These databases include work I originally researched starting in blocks between 2014, 2016, and ideas even back from 2010 when I was running memcached on production sites. These products are the result of 6+ years of development and 15+ years of production experience. Sorry, but “give it away for free” doesn’t compute. More efficient RAM usage at scale is directly translatable into monetary savings. Given in-memory cache servers have a long product lifetime (modern memcached started ~2003, so 17+ years so far), the integration of cost savings over a product’s innovation lifetime is immense when goals are maximizing RAM optimizations. Carrier Cache and Carrier DB can save dozens of billions of dollars in RAM usage over the years when deployed at scale8.

You can download Carrier DB trial or Carrier Cache trial for evaluation. Carrier Cache only supports the legacy memcached interface while Carrier DB supports both legacy memcached and legacy redis interfaces9. The trials are fully functional, but they exit after a random 2-6 day timeout to enforce a “maximum uptime per process” trial period. If you can handle full cache evictions randomly, you could place the trial servers under supervision then run them forever just with auto-restarts every 2-6 days.

Both Carrier DB and Carrier Cache are production ready, but Carrier DB has a “beta” label because features may still be refined in future releases.

The product rate for licensed Carrier Cache and Carrier DB is $5,000 per server per year. Additional support rates are based on response time needs and number of total sites deployed across. Contact sales for to place an order for licenses, source sharing agreements, embedded or IoT device licensing, etc.

Any questions?

For small data, memcached RAM growth is dominated by the overhead of storing individual keys no matter how large the actual data. If you store 300 million integers in memcached, it takes 30 GB RAM due to bloated memcached overhead. If you store the same 300 million integers in Carrier Cache, you only need 3.83 GB.↩︎

Carrier DB is a superset of Carrier Cache.↩︎

when a zvm process yields, the zvm yield mechanism automatically locks all keys the zvm process was using (this is why having a specific key-value aware vm is useful). only the owning zvm process can unlock locked keys the next time it gets scheduled to run. if another process needs to access the locked key, it will automatically yield itself, which puts it behind the process with access to unlock and continue operating on the locked key(s) (which will hopefully finish processing and leave the key(s) unlocked for the next zvm process to pick up and start using regularly).↩︎

Carrier DB’s opcode format was inspired by SQLite’s VDBE. though, unlike VDBE, for efficiency, zvm commands are sometimes a mix of generic multi-purpose opcodes to prepare commands/locks/results and some very specific opcodes to run a whole command with one call (e.g. if the command has specialized complex flow control behavior anyway).

For example,

RPOPLPUSHuses custom opcodezopListRPOPLPUSHfor performance:createCommand drpCmdListRPOPLPUSH(zvmOpAllocation zoa) { /* Does src exist and is it a list? */ /* If source key doesn't exist, it's a user error. */ add(zopKeyExistsAsType, 0, 2, CARRIER_TYPE_LIST); add(zopError, ZVM_ERROR_KEY, CARRIER_ERR_KEY_NO_EXISTS); /* Is dst either a list or doesn't exist so we can create it? */ add(zopKeyExistsAsTypeOrCreate, 1, 2, CARRIER_TYPE_LIST); add(zopError, ZVM_ERROR_KEY, CARRIER_ERR_KEY_NOT_LIST); /* Preconditions met! Now run rotate. */ add(zopListRPOPLPUSH, 0, 1, false); }while a simpler command like

LPUSHcan use opcodes for each loop iteration which makes it more responsive to reductions (though, even specific opcodes likeRPOPLPUSHcan still bump their reduction counts internal to one function which allows intra-opcode yield/resume too):↩︎createCommand drpCmdListLPUSH(zvmOpAllocation zoa) { /* Does src exist and is it a list? */ /* If source key doesn't exist, create it as a list. */ add(zopKeyExistsAsTypeOrCreate, 0, 2, CARRIER_TYPE_LIST); add(zopError, ZVM_ERROR_KEY, CARRIER_ERR_KEY_NOT_LIST); /* Store the index of the next parsed value to process in arg 0. * When done iterating, jump +3 instructions ahead. */ add(zopForEachValue, 0, 3); /* Index of key, index of argument to use to retrieve value: */ /* true == push to head */ add(zopListPush, 0, 0, true); /* Loop again */ add(zopJumpOffset, -2LL); /* Result is total elements in list */ add(zopListCount); }(CDBMAMEDSITW—catchy!)↩︎

multilist is a complete rewrite and massive improvement to my original 2014 work on improving redis list performance through flat buffer unrolling. since then, i abandoned the entire redis ‘ziplist’ thing and wrote a new flat buffer from the ground up (plus, my new implementation is the world’s most memory efficient dontchaknow).

if you’re curious about my original attempt at flat buffer unrolling, see these posts from 2014: Redis Quicklist and Quicklist: Visions and Revisions↩︎

the single micro-architecture dispatcher binary is the only binary we distribute not compiled to a hardware optimization level or else it wouldn’t start for some users. hardware-optimizing the launcher binary wrapper would defeat the entire purpose of having it exist in the first place.↩︎

if you are spending dozens of billions of dollars on RAM please do give me a call↩︎

The reason for two products is many users only need GET/SET/ADD/REPLACE, so legacy memcached commands are a perfect fit. Also, since memcached doesn’t have data types (everything is a string or string-integer-string conversions), the data storage layer doesn’t have to store a datatype per key, so there’s a slight memory savings with Carrier Cache over Carrier DB because Cache can avoid extra “what type is this” bytes for each key. Also also, legacy memcached protocol is designed for concurrent replies in a way legacy redis protocol can’t match, so Carrier Cache (or Carrier DB in legacy memcached mode) can be higher performance when there are many tiny requests in-flight waiting for replies.↩︎