AI Architecture For Discovering True AI / AGI / Computational Consciousness

Deep learning took over the world, but what if deep learning isn’t good enough?

What if deep learning is actually never[1] going to result in True AI / AGI / Computational Consciousness?

TOC

- What is Deep Learning?

- How do we build a True AI?

- Are You Cereal?

- Architecture for a True AI / AGI / Computational Consciousness

- WHAT IS THE POINT

- Conclusion

What is Deep Learning?

Deep learning is a method of decomposing structured information into individual features then manipulating those features towards a binary outcome.

Deep learning works well for categorizing and decomposing photos, videos, and audio because those forms of information are, by definition, highly structured so there’s an innate pre-existing structure to extract (if the world weren’t already ordered, our brains wouldn’t be able to understand the world)[2].

Deep learning works well for photos, video, and audio because the deep learning “stacks of matrices” approach approximates how part of our brains process information. We know our visual system is a layers-of-feature-detectors system. We know information processing systems process information—big if true. But, outside of processing streams of input for immediate discrimination, brains do not have a purely hierarchical stack-of-matrices architecture for the remainder of cognition.
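To make the “stacks of matrices” idea concrete, here is a minimal pure-Python sketch of a feed-forward pass. The layer sizes, weights, and helper names (`matvec`, `relu`, `forward`) are arbitrary illustration values, not anything from a real model.

```python
# A toy "stack of matrices": each layer is a matrix multiply followed by a
# nonlinearity. Sizes and weights are arbitrary illustration values.

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    """Zero out negative activations."""
    return [max(0.0, v) for v in vector]


def forward(layers, inputs):
    """Push the input through every layer in order (pure feed-forward)."""
    activations = inputs
    for weights in layers:
        activations = relu(matvec(weights, activations))
    return activations


layers = [
    [[1.0, -1.0], [0.5, 0.5]],  # layer 1: 2 inputs -> 2 features
    [[1.0, 1.0]],               # layer 2: 2 features -> 1 output
]
output = forward(layers, [2.0, 1.0])
print(output)
```

Information only flows one way through the stack, which is the property the rest of this post argues is not enough.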

So, what is deep learning overall? Deep learning is just: multiplying 16 numbers together really fast.

All deep learning performance improvements over the past 5-8 years have been because hardware continues to increase the speed of multiplying 16 numbers together.

It’s 2020 and we can multiply 16 numbers together really really fast now!

Yet, “deep” “learning” hasn’t given us any insight towards architecting an actual True AI / AGI / Computational Consciousness[3]. What’s missing?

If your identity is ego-bonded to deep learning maximalists, like a select few weird public/private/public/private/private research groups, you think True AI will “suddenly just happen” when you cross a threshold of using 100 million GPUs to process sentiment vectors trained on meme libraries. Then, the day after you achieve True AI, you and your top 8 on myspace will become the world’s first private trillionaires; it’ll be game over for your enemies as you ascend to your rightful place as godking rulers of the local group.

*record scratch* but the deep learning maximalist vision of the future is not viable

Deep learning will not give us an AGI or computational consciousness. Know what will? Developing a computational equivalent to human brain organization. Human brains can’t be described by egregiously large matrices dispatched to GPUs for endless categorization tasks. Human brains can only be described by network connectivity detailed enough to simulate interactions between 3nm wide molecules[4].

How do we build a True AI / AGI / Computational Consciousness?

The problem with brains is that neural organization is messy outside of our hierarchical visual and auditory systems. Sure, the neocortex is mostly stamped clones of columns, but mostly is not all, and every column is innervated by tons (technical term) of other systems in a very “this is not compatible with your massive-matrix-on-GPUs ideology” way.

Are You Cereal?

Let’s run a thought experiment. Imagine you have a box of cereal. Any small type of cereal. Something like cocoapuffs or rice krispies or honey bunches of goats. You know, the fun ones.

Now imagine you’re standing in a kitchen, you open a box of cereal, you open the inner plastic bag thing, then you raise the box over your head and just shake it in every direction while spinning in a circle. You spin in a circle and shake out the entire box of cereal all across the entire kitchen and even down the hallway so some pieces even fling far out into other rooms.

Now the box is empty and you have thousands of cereal things (grains, puffs, rice, goats) all over the place.

Imagine you want to record the exact state of every dropped piece of reality inside an artificial digital system (and no, you can’t just take a picture). Consider that this is for a full physics simulation of every piece lying on the floor.

To successfully describe the physics of the scene, you need to record:

- the exact 2-D border shape of each piece

- the exact 3-D height/depth shape of each piece (e.g. frosted miniwheat has different heights than frosted flakes)

- every measurement between all corners of every piece within line of sight of each other (if they can “see” each other)

- the individual mass distribution of each piece (does a piece have bumps or hills or valleys?)

How do we record all those details? If we wanted to describe the scene as a matrix, what would we index as rows and columns? At high enough fidelity, it would be like reproducing a picture down to an atomic level, which seems overkill. We need a compromise between “reproduce the atomic layout” and fuzzy “just treat everything as a point mass and draw approximate strings between them.”

To start we should record and account for each piece. We know we have discrete pieces. We can see them. We threw them out.

So we need to index each fallen piece from 0 to N. Let’s say you have a big box and there’s one million (2^20) pieces inside.

    cereals = [Cereal(N) for N in range(0, 2**20)]

Now we need to describe each cereal. For each cereal, we need to attach: mass, border shape, height shape.

    # Mass, Border, and Height are placeholder types that each parse a csv polygon
    for idx, cereal in enumerate(cereals):
        print("mass for", idx, "(in nested csv polygon annotated with mass per section)")
        mass = input("> ")
        print("border for", idx, "(csv polygon)")
        border = input("> ")
        print("height for", idx, "(csv polygon)")
        height = input("> ")
        cereal.mass = Mass(mass.split(","))
        cereal.border = Border(border.split(","))
        cereal.height = Height(height.split(","))

Now the worst part: we need to describe each piece in relation to every other piece.

    for idxA, cerealA in enumerate(cereals):
        for idxB, cerealB in enumerate(cereals):
            # don't relate self to self
            if idxA == idxB:
                continue
            print("can", idxA, "see", idxB, "?")
            see = input("> ")
            if see.lower() == "yes":
                print("describe coordinate difference between", idxA, "and", idxB, "from viewpoint of", idxA, "(csv polygon)")
                diff = input("> ")
                polygon = CoordinateDifference(diff.split(","))
                # peers maps a visible piece's index to its relative geometry
                cerealA.peers[idxB] = polygon

And we’ll stop there without continuing to describe any other features.

Just here, for 2^20 cereal pieces, we’ve needed to use memory for:

- 1 million identifiers

  - 4 MB to 8 MB depending on integer size

- 1 million mass maps

  - depending on resolution, 4 to 100 integers per piece

  - up to 800 MB

- 1 million borders

  - depending on resolution, 4 to 20 integers per piece

  - up to 160 MB

- 1 million heights

  - same, up to 160 MB

- 1 million^2 relations == 1 trillion comparisons, but let’s say only 1/5th of pieces can see each other, so:

  - 200 billion relations for storage

  - depending on resolution, 4 to 20 integers per description

  - up to 20 integers * 8 bytes per integer * 200 billion relations = 32 TB RAM
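The worst-case totals above are easy to double check. This is a minimal sketch of the same arithmetic; the constant names are made up for illustration, and it rounds 2^20 down to one million the same way the list does.

```python
# Worst-case figures copied from the list above; names are illustrative.
PIECES = 10**6          # the text rounds 2**20 down to "1 million"
BYTES_PER_INT = 8       # the larger of the two integer sizes considered
VISIBLE_ONE_IN = 5      # assume 1/5th of all pairs can see each other

id_bytes = PIECES * BYTES_PER_INT
mass_bytes = PIECES * 100 * BYTES_PER_INT      # up to 100 integers per piece
border_bytes = PIECES * 20 * BYTES_PER_INT     # up to 20 integers per piece
height_bytes = PIECES * 20 * BYTES_PER_INT

relations = PIECES * PIECES // VISIBLE_ONE_IN  # 200 billion stored relations
relation_bytes = relations * 20 * BYTES_PER_INT

print("identifiers:", id_bytes // 10**6, "MB")
print("mass maps:  ", mass_bytes // 10**6, "MB")
print("borders:    ", border_bytes // 10**6, "MB")
print("heights:    ", height_bytes // 10**6, "MB")
print("relations:  ", relation_bytes // 10**12, "TB")
```

Everything per-piece stays under a gigabyte. The pairwise relations are the only term that grows with the square of the piece count, and they dominate the total.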

And all of those estimates don’t include the default python 80 byte overhead per object, which would be an additional 170 GB RAM. Obviously any practical implementation would use more efficient representations than default object oriented constructs, but this simple sketch points out the importance of memory efficiency when faced with multiplicative state explosion.
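As a rough illustration of that per-object overhead, the sketch below compares boxed Python integers against the same values packed into the standard library `array` type. The sample size is arbitrary and exact byte counts depend on the interpreter and platform, so treat the printed numbers as indicative only.

```python
import sys
from array import array

COUNT = 1000  # small sample; the per-item cost is what matters

# One full Python int object per value.
boxed = [10**6 + i for i in range(COUNT)]

# The same values as raw unsigned machine integers (typically 4 bytes each).
packed = array("I", boxed)

# Count only the int objects themselves, not the list that holds them.
boxed_bytes = sum(sys.getsizeof(value) for value in boxed)
packed_bytes = packed.itemsize * len(packed)

print(boxed_bytes // COUNT, "bytes per boxed int")
print(packed_bytes // COUNT, "bytes per packed int")
```

The same idea scales up: store fields in flat typed buffers and keep objects out of the hot path.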

We have a name for this problem though: the curse of dimensionality.

The curse of dimensionality is a fact of the world—the world is a high dimensional computational construct.

Deep learning attacks the curse of dimensionality through clever hacks including: just shrink the state space, wait until GPUs get cheaper then use 10x more of them, mock local connectivity with convolutions, etc.

But developing unlimited numbers of complicated deep learning hacks will never capture every nuance of the world through static feature hierarchies plus softmax.

The only viable way forward for a True AI architecture is to tackle the curse of dimensionality directly and with vigor to such an extent we build custom live in-memory biologically plausible neural neo-networks consuming multiple petabytes of RAM with live communication inside the network itself. True AI is not a feed-forward batch processing system—it’s a live interactive agglomeration exchange of spikes and depressions in real time to sufficient fidelity evoking a directed internal model of the world manipulated by independent inward, for lack of a better word, desires.

Architecture for a True AI / AGI / Computational Consciousness

To solve the neural computational curse of dimensionality, we need a new approach not based around “just add more GPUs and it’ll wake up one day.”

We call our new approach: Crush the Curse of Dimensionality Try-Hard architecture.

The goals of our computational consciousness platform are to:

- have as much RAM as possible

- have fast system storage

- use the fastest low latency network possible

- sprinkle in some accessory GPUs for highly ordered data we know modern machine learning is good at exploiting (images, video, audio) to use as feature input to our more plausible actual-computational-neuron architecture

- plus we need an array of backup storage to save snapshots of the creations (we do not condone outright deletion of nascent AIs here at matt dot sh, we will always save them as backups)

With those targets in mind, our 2020 computational consciousness platform is going to have:

- 4 PB RAM

- 60 PB NVMe in data AI servers

- 512+ PB NVMe cluster for snapshot/backup

- 96 A100 GPUs

  - each has 40 GB HBM2 at roughly 1.6 TB/s, for a total of about 153 TB/s aggregate memory access

  - each has up to 312 TFLOPS of dense FP16 tensor throughput, for a total of about 30 PFLOPS across all 96 A100s

  - each has 6,912 cuda cores, for a total of 663,552 cuda cores across all 96 A100s

- 4 fun giant infiniband switches (data path/RDMA/RPC)

- 54 40-GigE switches (management)

Which breaks down to:

- 1,020 data AI servers, each with:

  - 4 TB ECC RAM and 60 TB NVMe

  - 128 AMD 3 GHz cores

  - 200 Gbps infiniband connection to its leaf

- servers are split into two groups of 15 racks

  - each group of 15 racks has an infiniband leaf

  - each infiniband leaf trunked to the spine at 40 Tbps (200 200-Gbps ports upstream)

- 12 A100 servers, each with:

  - 1 TB RAM

  - 256 AMD cores

  - 8x NVIDIA Tesla A100 SXM4-40GB

  - 1.8 Tbps infiniband connection to its leaf

- 180 snapshot/backup servers, each with:

  - 3.3 PB NVMe

  - 400 Gbps infiniband connection to its leaf

  - infiniband leaf trunked to the spine at 80 Tbps (400 200-Gbps ports upstream)

- for a cluster-wide total of:

  - 4.08 PB of ECC RAM

  - 61.2 PB of NVMe on data AI servers

  - 528 PB of NVMe snapshot/backup

  - 3,200 infiniband switch ports

    - 800-port spine fully populated

    - 40 ports unused in the NVMe cluster

    - 36 ports unused in each data AI mini-cluster of 510 data AI servers + 6 A100 servers

  - 42 racks total[5]

    - 15 racks of 510 data AI servers + 6 A100 servers

    - 15 racks of 510 data AI servers + 6 A100 servers

    - 8 racks of snapshot/backup storage

    - 3 racks of leaf switches

    - 1 spine switch
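The data AI side of the breakdown can be re-derived with a few lines of Python. This is only a sketch: the constant names are invented here, and it assumes every infiniband link is built from 200 Gbps ports and that each of the four switches exposes 800 ports (3,200 / 4), which the list implies but does not state outright.

```python
# Figures copied from the breakdown above; names are illustrative.
DATA_SERVERS = 1020
RAM_TB_PER_SERVER = 4
NVME_TB_PER_SERVER = 60
CORES_PER_SERVER = 128
A100_SERVERS = 12
GPUS_PER_A100_SERVER = 8

PORT_GBPS = 200     # assumed size of one infiniband port
SWITCH_PORTS = 800  # assumed ports per switch (3,200 ports / 4 switches)

total_ram_pb = DATA_SERVERS * RAM_TB_PER_SERVER / 1000
total_nvme_pb = DATA_SERVERS * NVME_TB_PER_SERVER / 1000
total_data_cores = DATA_SERVERS * CORES_PER_SERVER
total_gpus = A100_SERVERS * GPUS_PER_A100_SERVER

# Port budget for one data AI mini-cluster leaf (half of the servers).
server_ports = (DATA_SERVERS // 2) * (200 // PORT_GBPS)   # 200 Gbps each
a100_ports = (A100_SERVERS // 2) * (1800 // PORT_GBPS)    # 1.8 Tbps each
uplink_ports = 40_000 // PORT_GBPS                        # 40 Tbps to the spine
unused_ports = SWITCH_PORTS - (server_ports + a100_ports + uplink_ports)

# The spine takes two 40 Tbps leaf trunks plus one 80 Tbps trunk.
spine_ports = 2 * (40_000 // PORT_GBPS) + 80_000 // PORT_GBPS

print(total_ram_pb, "PB RAM,", total_nvme_pb, "PB NVMe")
print(total_data_cores, "data AI cores,", total_gpus, "GPUs")
print(unused_ports, "unused ports per data AI leaf,", spine_ports, "spine ports")
```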

As of June 2020, we could deploy this architecture for under $500 million USD[6].

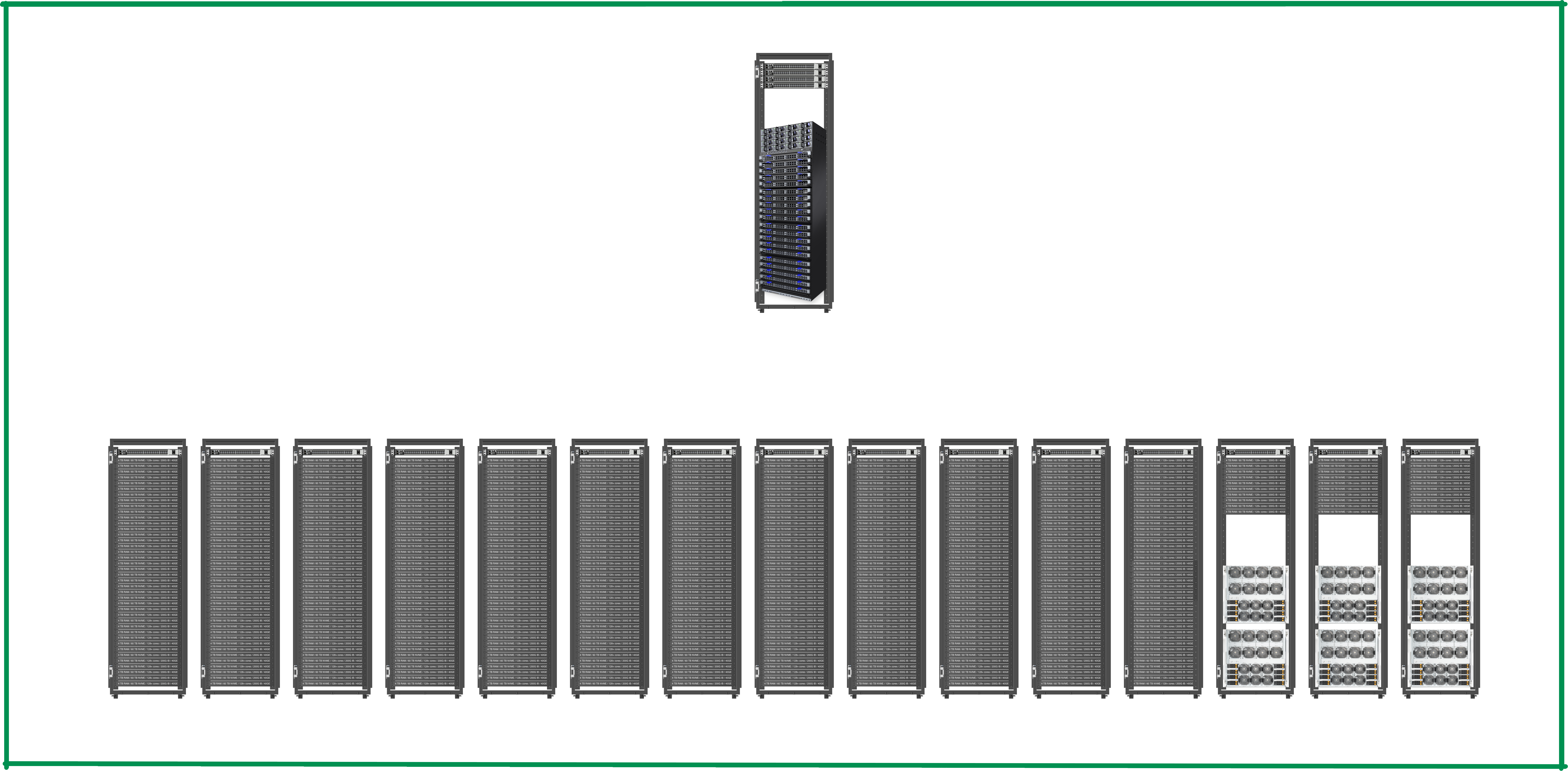

Deployment Pictures

I spent way too long drawing this up, so now you have to look at it.

The 15-rack data AI mini-cluster

- 510 data AI servers

  - each with 4 TB RAM, 60 TB NVMe, 128 3GHz cores, 200 Gbit network data/RDMA/RPC, 40 Gbit network management

- 6 A100 servers

- 40 Tbps uplink from infiniband leaf

- two of these mini-clusters make up the whole data AI cluster having 1,020 data AI servers + 12 A100 servers

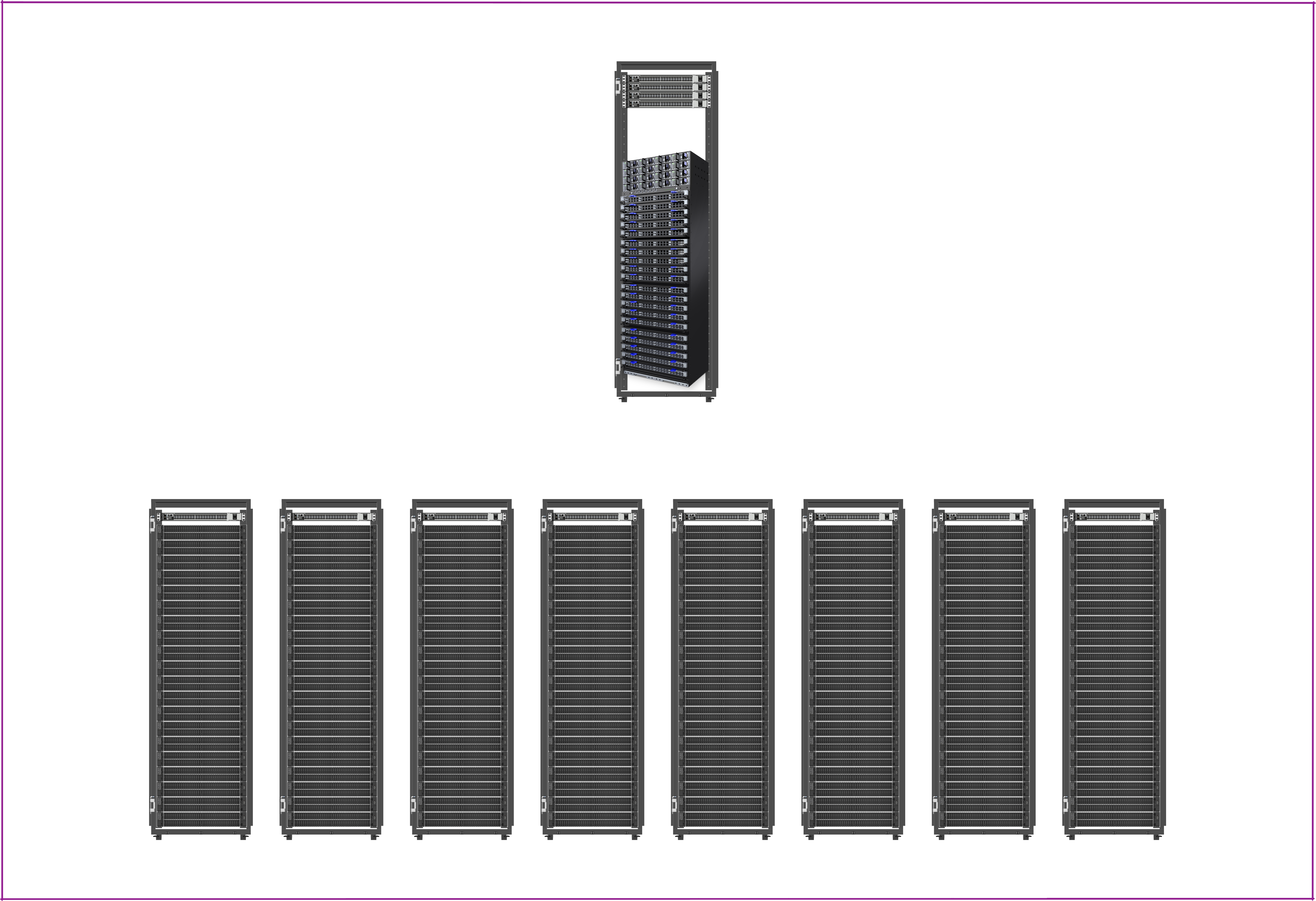

8-rack NVMe cluster

- 160 108-port NVMe servers

  - each with 3.3 PB storage, 1 TB RAM, 400 Gbps network data, 40 Gbps network management

- 80 Tbps uplink from infiniband leaf

leaf/spine switch config

- same layout for the three leaf and single spine racks (we don’t care about failover here)

- couldn’t find a head-on view of the CS8500. please do not mount your giant switch this way.

WHAT IS THE POINT

What’s the point of my Crush the Curse of Dimensionality Try-Hard architecture? Why were all those specific memory and network and storage limits chosen?

Let’s run some numbers on brain neuron connectivity and see what maximum storage limits we have:

- human brains have ~100 billion neurons

- each neuron has 100 to 1 million connections to other neurons

- for simplicity, let’s use a range of 1,000 to 5,000 connections per neuron

- we’re already at curse-of-dimensionality levels with 100 billion * 5,000 = 500 trillion

- if we used 8 bytes to describe each connection, we’re up to 500 trillion * 8 bytes = 4 petabytes

- but there’s more!

- neural connections across synapses aren’t binary

- each synapse is modulated by hundreds to thousands of neuroreceptors—which are semantically meaningful in brain computations

- so, in addition to each neuron having 100 to 1,000,000 connections, each connection itself has 100 to 1,000+ neuroreceptors

- if we use two bytes to describe each neuroreceptor, we need an extra 500 trillion * 1,000 * 2 bytes = 1 exabyte (whoops!)

- also, synaptic neuroreceptors are not symmetric, so if we need to model presynaptic reuptake, we need to double the model to represent both sides of a synapse at: 2 exabytes!

- so… 4 PB to describe neural connections and 2 EB to reasonably describe synapses
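The storage figures in this list follow from straightforward multiplication. Here is a small sketch of that calculation; the constant names are illustrative, and every value is the upper-end figure used in the bullets above.

```python
# Upper-end figures from the list above; names are illustrative.
NEURONS = 100 * 10**9
CONNECTIONS_PER_NEURON = 5_000   # top of the simplified 1,000 to 5,000 range
BYTES_PER_CONNECTION = 8
RECEPTORS_PER_SYNAPSE = 1_000
BYTES_PER_RECEPTOR = 2
SIDES_PER_SYNAPSE = 2            # presynaptic and postsynaptic

synapses = NEURONS * CONNECTIONS_PER_NEURON
connection_bytes = synapses * BYTES_PER_CONNECTION
receptor_bytes = (
    synapses * RECEPTORS_PER_SYNAPSE * BYTES_PER_RECEPTOR * SIDES_PER_SYNAPSE
)
total_bytes = connection_bytes + receptor_bytes

print(synapses // 10**12, "trillion synapses")
print(connection_bytes // 10**15, "PB for connections")
print(receptor_bytes // 10**18, "EB for neuroreceptors")
print(total_bytes / 10**18, "EB total")
```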

How do we square this estimate of 2.004 EB[7] with the data AI server capacity of 4 PB RAM + 60 PB NVMe?

First of all, we don’t need a whole brain! We can ignore many human brain modules. Let’s ignore most of the sensory homunculus, the visual decoding system (since we outsource those to GPUs), the auditory decoding system (again, GPUs), all components dealing with internal homeostasis and internal organ regulation, anything to do with balance and posture, etc.[8] Our goal is to synthesize an inner world model, forward planning, and inner consciousness experience in its totality, so excess sensory modalities aren’t necessary (the True AI / AGI / Computational Consciousness will never have a tummy ache).

With most biology-supporting-only brain parts removed, we’ve got more headroom to exercise.

Additionally, we obviously won’t use 8 byte integers for describing neurons, synapses, directed relationships, and neuroreceptors.

Instead of bloating our very limited system storage with full size integers, we’ll have to use every low level binary optimization we can rabbit out of a hat including, but not limited to: performance combinations of custom varints, compressed bitmaps, out-of-core optimized algorithms, plus exploiting tightly coupled mechanical sympathy deterministic neuron-targeted routing algorithms (after all, this is a distributed system with 1,000+ servers and 128,000+ cores).
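As one hedged example of what “custom varints” could look like, the sketch below delta-encodes a sorted list of target neuron IDs and stores each gap as a LEB128-style unsigned varint. The encoding choice, the function names, and the sample IDs are all assumptions made for illustration; nothing here is a committed design.

```python
def encode_varint(value):
    """Encode a non-negative int as LEB128-style bytes (7 payload bits each)."""
    if value < 0:
        raise ValueError("this sketch only handles unsigned values")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_targets(sorted_ids):
    """Store each ID as the varint-encoded gap from the previous ID."""
    out = bytearray()
    previous = 0
    for neuron_id in sorted_ids:
        out += encode_varint(neuron_id - previous)
        previous = neuron_id
    return bytes(out)


def decode_targets(data):
    """Reverse encode_targets: rebuild absolute IDs from varint gaps."""
    ids = []
    current = gap = shift = 0
    for byte in data:
        gap |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
        else:
            current += gap
            ids.append(current)
            gap = shift = 0
    return ids


# Hypothetical synapse targets for one neuron, sorted by ID.
targets = [90_000_000_000, 90_000_000_017, 90_000_000_450, 90_000_100_000]
packed = encode_targets(targets)
print(len(packed), "bytes instead of", 8 * len(targets))
```

The saving depends on neighboring IDs being numerically close, so it only pays off if neuron IDs are assigned with locality in mind.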

hmm it’s almost as if someone should create a provably memory efficient system to save us ram when we need to store trillions of items in the smallest memory possible.

In addition to not duplicating biological-only brain modules for our computational consciousness bootstrapping, we can also simplify the neural chatty mechanics. Brains multiplex metabolic maintenance with cognitive processing, but we’re a computer—we don’t need to metabolize anything or have blood brain barriers or continually consume Ca2+ through plasma membrane channels. We can discretize many common brain behaviors from multiple complex molecular time-space interactions down to single viable inputs to trigger responses.

Overall, we should be able to prune the “brain state space” down from 2 EB to 64 PB.
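To put that pruning target in perspective, this short sketch works out the reduction factor and the resulting per-synapse byte budget. It assumes the full 500 trillion synapses are kept, which is pessimistic since whole brain modules are being dropped; the names are illustrative.

```python
FULL_MODEL_BYTES = 2 * 10**18   # the ~2 EB estimate
CLUSTER_BYTES = 64 * 10**15     # 4 PB RAM + 60 PB NVMe
SYNAPSES = 500 * 10**12         # assumes no synapses are dropped

reduction_factor = FULL_MODEL_BYTES / CLUSTER_BYTES
full_bytes_per_synapse = FULL_MODEL_BYTES // SYNAPSES
budget_bytes_per_synapse = CLUSTER_BYTES // SYNAPSES

print("need a", reduction_factor, "x reduction")
print(full_bytes_per_synapse, "bytes per synapse before pruning")
print(budget_bytes_per_synapse, "bytes per synapse within the cluster")
```

Every synapse removed along with an unneeded brain module raises the budget for the ones that remain.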

When converting from biology to binary, these optimizations should help narrow things down somewhat:

- outsource first-level signal processing to GPUs which are good at signal processing (auditory/visual)

- decouple and avoid implementing biology-required functionality (metabolism, ion gates, etc)

- reduce neuronal redundancy biology imparted due to needing survival after impact trauma, over-provisioning to slow various age related degeneration issues, etc

- and we probably don’t need 200+ neurotransmitters—the big 12-24 should be fine to start.

Conclusion

So we’ve covered storage details, but what about actual processing of information in the mock neural neo-network? How is information getting into and leaving the system? What about synaptic plasticity? What about neurogenesis? What about pruning axons? What about Hebb or STDP and twelve other neuron learning postulates? How do we get our computational consciousness to operate at human (or human++) speed with only 128,000 CPU cores across 1,000 servers? 128,000 cores works out to only 4 trillion synapse updates per second, but don’t we have 100+ trillion synapses? What’s the balance between real time CPU processing addressing individual gap junctions versus batch GPU processing?

Actual data processing and network modeling will have to be a story for another time.
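For a sense of scale on the throughput question, here is a small sketch of what the quoted figures imply. The post does not say how the 4 trillion updates per second was derived, so the cycles-per-update number below is just what falls out of dividing the stated values, using the 3 GHz clock and the 500 trillion synapse estimate from earlier.

```python
CORES = 128_000
CLOCK_HZ = 3 * 10**9                # 3 GHz cores from the server spec
UPDATES_PER_SECOND = 4 * 10**12     # figure quoted in the text
SYNAPSES = 500 * 10**12             # upper estimate from the storage math

updates_per_core = UPDATES_PER_SECOND // CORES
implied_cycles_per_update = CLOCK_HZ / updates_per_core
seconds_per_full_sweep = SYNAPSES / UPDATES_PER_SECOND

print(updates_per_core, "updates per core per second")
print(implied_cycles_per_update, "clock cycles available per update")
print(seconds_per_full_sweep, "seconds to touch every synapse once")
```

A full sweep taking minutes rather than milliseconds suggests the system would need to update only the synapses that are active at any moment, not all of them on every tick.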

Neurons are complex beasties. You have 100 billion neurons, each neuron is a cell (obviously), each cell has DNA regulating it (obviously), but each cell is not running the same program. Individual neurons can modify their gene expression due to environmental stress factors and epigenetic pressure; each individual neuron is a self-modifying gene factory with no synced central state[9].

To enact neurally plausible data processing at scale, we need a flexible, modifiable, self-regulating programming framework to read the neural neo-network directed graph and execute an efficient form of message passing, where messages can be temporally queued until resulting expiry pressure triggers receptors to get cleared/discarded, get cleared/reëmitted, or spike a potential to propagate further signals/vesicle exocytosis if the neuroreceptor population overcomes the idle neuron’s present (and temporal-variable!) resistance level.
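A heavily simplified sketch of that message passing loop might look like the following. Everything here is an assumption made for illustration: the `Neuron` class, the fixed `resistance` threshold, the tick-based clock, and the rule that expired messages are simply discarded. Re-emission and time-varying resistance are left out.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Neuron:
    resistance: float                             # charge needed before firing
    targets: dict = field(default_factory=dict)   # target id -> connection weight
    pending: list = field(default_factory=list)   # heap of (expiry_tick, weight)

    def receive(self, now, weight, lifetime):
        """Queue an incoming message that expires after `lifetime` ticks."""
        heapq.heappush(self.pending, (now + lifetime, weight))

    def step(self, now):
        """Discard expired messages, then report whether the rest cause a spike."""
        while self.pending and self.pending[0][0] <= now:
            heapq.heappop(self.pending)
        if sum(weight for _, weight in self.pending) >= self.resistance:
            self.pending.clear()  # firing consumes the queued messages
            return True
        return False


def run(neurons, stimuli, ticks, lifetime=3):
    """Simulate `ticks` steps; stimuli maps tick -> [(neuron_id, weight)]."""
    spikes = []
    for now in range(ticks):
        for neuron_id, weight in stimuli.get(now, []):
            neurons[neuron_id].receive(now, weight, lifetime)
        fired = [nid for nid, neuron in neurons.items() if neuron.step(now)]
        for nid in fired:
            spikes.append((now, nid))
            # Outgoing messages arrive after this tick's evaluation.
            for target_id, weight in neurons[nid].targets.items():
                neurons[target_id].receive(now, weight, lifetime)
    return spikes


neurons = {
    "a": Neuron(resistance=1.0, targets={"b": 0.6}),
    "b": Neuron(resistance=1.0),
}
stimuli = {0: [("a", 1.0)], 1: [("a", 1.0)], 6: [("a", 1.0)]}
spikes = run(neurons, stimuli, ticks=10)
print(spikes)
```

In this toy run, neuron `b` only fires when two messages from `a` overlap in time; the lone message sent at tick 6 expires without effect, which is the expiry behavior described above.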

Nature doesn’t have to deal with all these nuanced problems because nature runs on physics. Nature doesn’t have to track 100 billion neuron IDs and 500 trillion synapse contents—everything just simply exists and when molecules bounce around, they get consumed or transmitted as necessary using tiny independent nothing-shared biological nanomachines.

Our purpose as computational consciousness creators is to fight against the complex combinatorial explosion of physical and chemical interactions. Our weapon is a linear binary tape in the form of RAM and storage addressed as [0, MAX). We wield our binary tape to model a 4D reality onto a 1D substrate then we re-project our interactive models into the space of all possible futures. We simplify the world and eventually, eventually, we find a way to make everything better in the end.

The end. For now.

[1] deep learning is in the “nobody got fired for buying IBM” hype stage. Nobody wants to risk their technical social status by coloring outside the deep learning lines to create more plausible approaches to computational cognition.

I think we can all agree deep learning has nothing to do with cognition. It’s all ways of just evaluating existing structures, and sometimes sampling distributions to generate new structures, but nothing with deep learning involves building up a coherent model of the world to evaluate future predictions, then iterating on predicted decisions to build new world models, to evaluate new future predictions, …

all of this isn’t to say deep learning is useless, but it’s like starting research into creating rockets to reach the moon, but you hire 10,000 bicycle experts. bicycles go fast downhill, so bicycles must be good for everything. That’s approximately the state of deep learning. One working approach for your limited domain may not be the living end, no matter how much you babble on about being a “universal function approximator! look at me! i can approximate any hyperspace decision boundary imaginable!” good for you, you’re great at telling me if this picture is a cat or a bat, but you’ll never be a good conversation partner, write a coherent novel, or try to subjugate the world under the will of your binary iron transistors.

[2] for other information like human language, the modern machine learning industry developed sentiment vectors or embeddings to approximate turning non-binary information into domain-constrained mathematical constructs. The approach works sometimes in limited domains, but will never evolve a truly experientially expressive experience.

people using embeddings know they aren’t perfect, so more constraints get placed on the outputs as hacks on top of embedding hacks to try and trick reviewers into thinking you aren’t doing the tricks you’re doing (which has its own set of philosophical implications we shouldn’t look at too closely).

for example, your sentiment vector / embedding approach will never be able to capture or describe all semantics from this video:

though, through a technical approach known as numerical fuckery, people think they can just map words to numbers for discrimination but there’s always a practical conceptual gap caused by trained bias and lack of fidelity in the training set (and embeddings only capture limited pre-existing context because they don’t have iterative inner generative experiences, no matter if you train on 25,000 examples or the entire library of congress):

[3] Sadly I feel we need to qualify what we mean by “AI” with all three descriptions (True AI / AGI / Computational Consciousness) because AI is (and always has mostly been) a marketing term first and a science term second. Throwing in Computational Consciousness is to also short-circuit anybody complaining about paperclips or ice cream or “but what about yer UTILITY FUNCTION or REWARD HACKING or INFINITE LOOPS!!”; we’re modeling sapient sapiens, not autonomous sociopathic orbital death lasers.

[4] This is already a fundamental limit: neurotransmitters are ~3nm while our silicon fab processes are just now reaching 5nm. also our brains are volumetric and don’t hit 100ºC when thinking, unlike my laptop.

[5] though, we’re assuming the CS8500s can be placed in a rack with the ethernet spines too. If the CS8500s need custom enclosures for cooling, we’d need one more rack to hold all the ethernet aggregations.

also the 42 rack number assumes you have an unlimited power and cooling budget per rack. if you are restricted to limited commercial deployments, you may need to distribute hardware across up to 5x more racks.

[6] and the $500 million estimate is assuming full retail prices. If you are a major hardware player (AWS/Azure/Apple/FB/Google) with custom boards, CPUs, and your own already-provisioned datacenter space, you can probably shave a significant amount off the initial estimate.

and as a practical note, I’d hold off on purchases until the end of 2020 when AMD Zen3 becomes available.

[7] which isn’t out of the question! We can get 1 EB out of 15 racks of NVMe servers, so 2 EB would be 30 racks fully populated with very very expensive things.

[8] These conditions of what we don’t need in a brain also imply we are not creating an embodiment here. This isn’t a full robot/android abstraction. This is friendly-brain-in-a-jar abstraction.

[9] each neuron adapting the cell based on its own DNA expression is also a reason deep learning will never generate a viable True AI. neurons are not dot products.